I am a third-year Ph.D. student at Caltech, advised by Prof. Georgia Gkioxari and Prof. Yisong Yue. I am also a research intern at the NVIDIA GEAR lab, working with Dr. Jim Fan and Prof. Yuke Zhu. I obtained my M.S. degree from Stanford University, advised by Prof. Fei-Fei Li, Prof. Yuke Zhu, Dr. Jim Fan and Dr. Shyamal Buch. I obtained my B.S. degree from the Hong Kong University of Science and Technology, where I was fortunate to work with Prof. Chi-Keung Tang and Prof. Yu-Wing Tai. My research interests lie in foundation models, robotics, and embodied agents. I am passionate about building generally capable embodied foundation agents that can discover and pursue complex, open-ended objectives and that understand how the world works through massive pre-trained knowledge.

Email / Google Scholar / Twitter / GitHub / LinkedIn

* Equal contribution, † Equal advising

We present Eureka, an open-ended LLM-powered agent that designs reward functions for robot dexterity at a superhuman level.

We introduce Voyager, the first LLM-powered embodied lifelong learning agent in Minecraft that continuously explores the world, acquires diverse skills, and makes novel discoveries without human intervention.

We introduce a novel multimodal prompting formulation that converts diverse robot manipulation tasks into a uniform sequence modeling problem.

We introduce MineDojo, a new framework based on the popular Minecraft game for building generally capable, open-ended embodied agents.

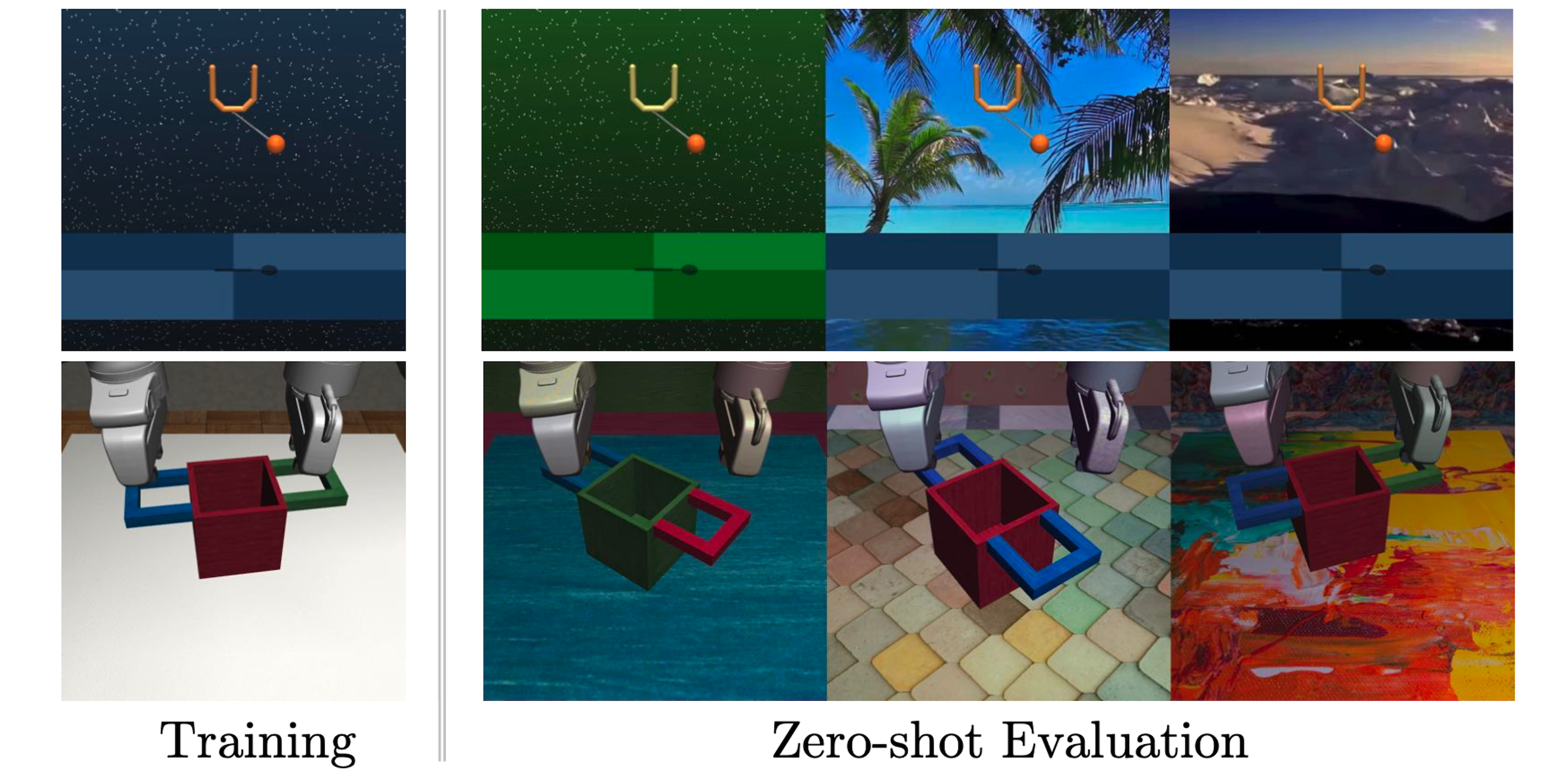

We propose SECANT, a novel self-expert cloning technique that leverages image augmentation in two stages to decouple robust representation learning from policy optimization.

We present iGibson, a novel simulation environment for developing interactive robotic agents in large-scale realistic scenes.

We propose a deep learning-based video matting framework that employs a novel and effective spatio-temporal feature aggregation module.

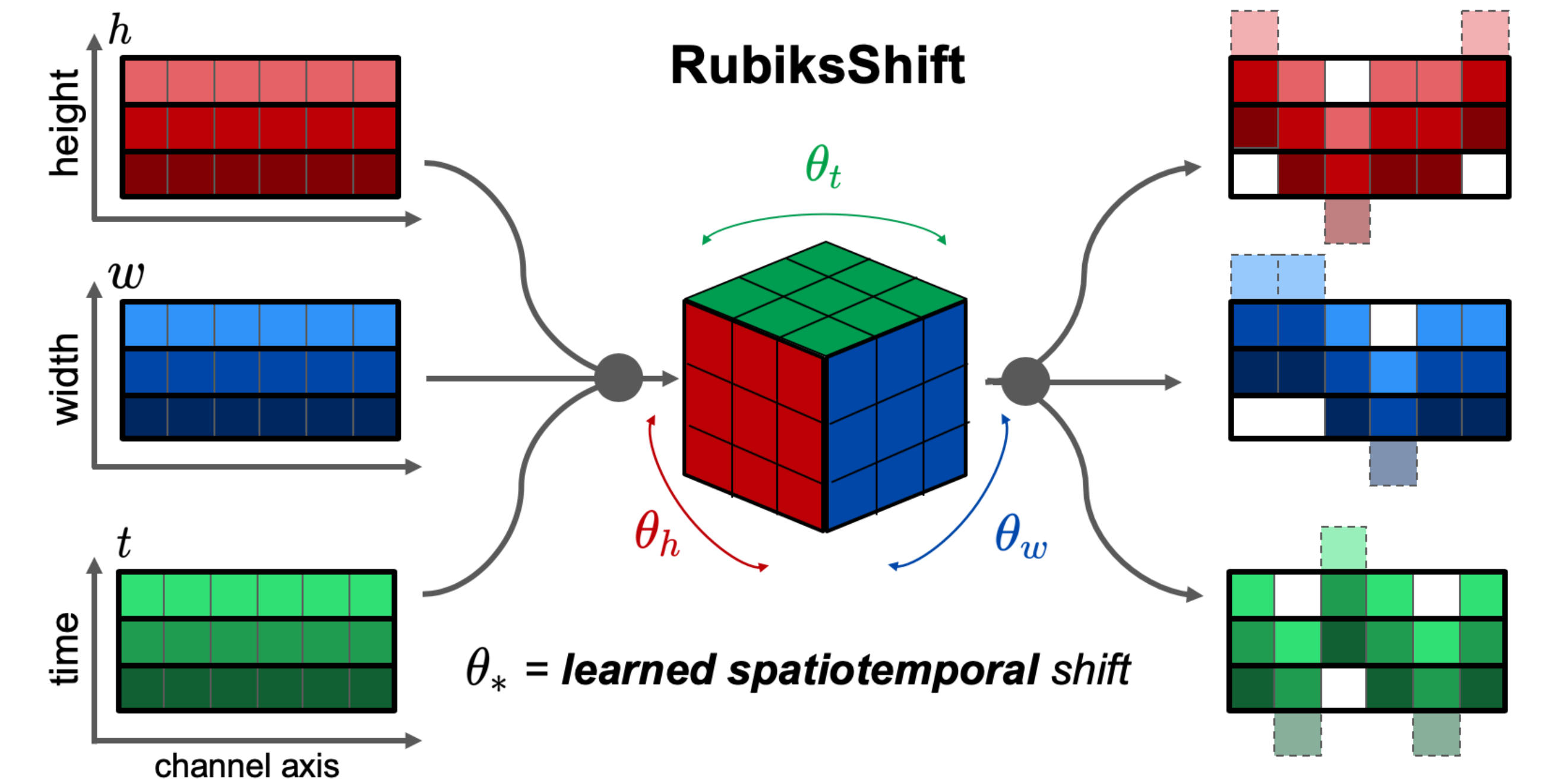

We propose RubiksNet, a new efficient architecture for video action recognition built on a learnable 3D spatiotemporal shift operation (RubiksShift).

We propose a local adversarial disentangling network for facial makeup and de-makeup that uses multiple overlapping local discriminators in a content-style disentangling network.

Caltech CS148: Large Language and Vision Models (Spring 2024)

Teaching Assistant

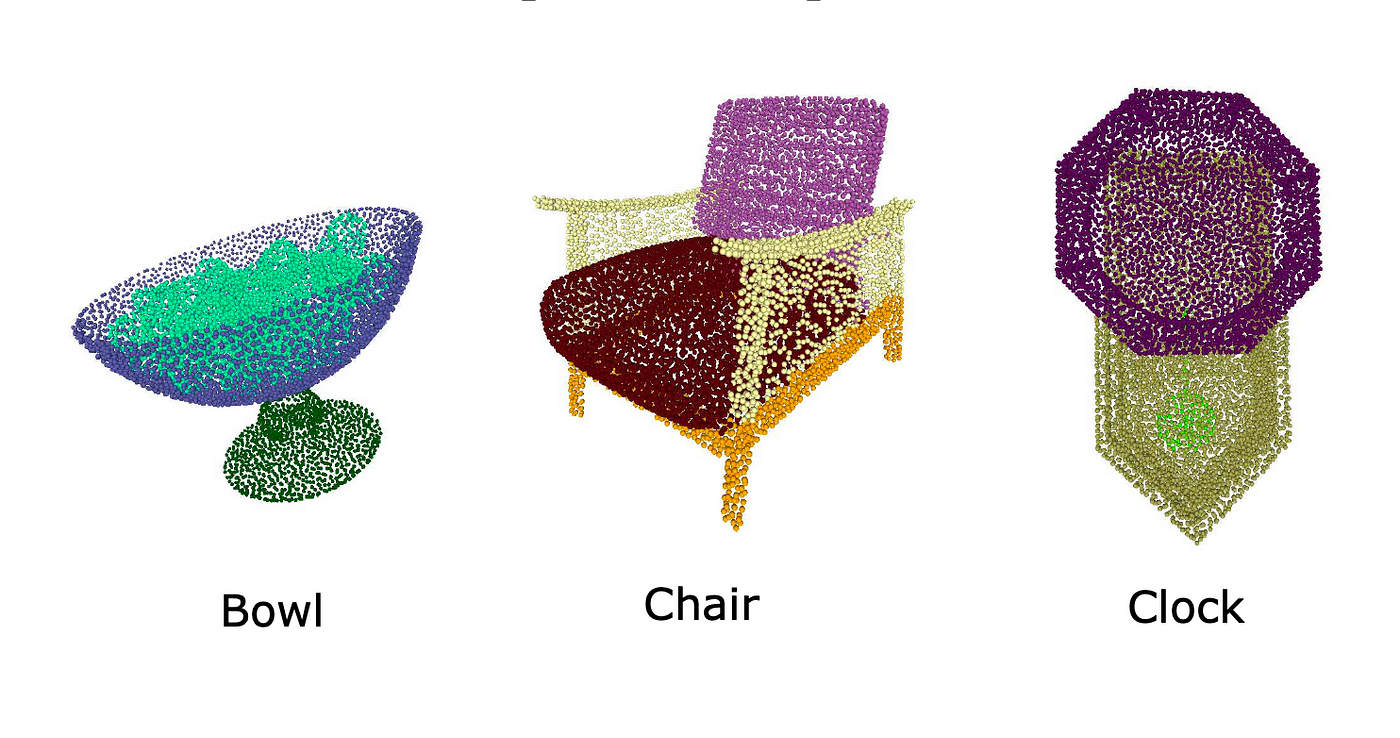

Caltech CS101: 3D Deep Learning (Winter 2024)

Teaching Assistant

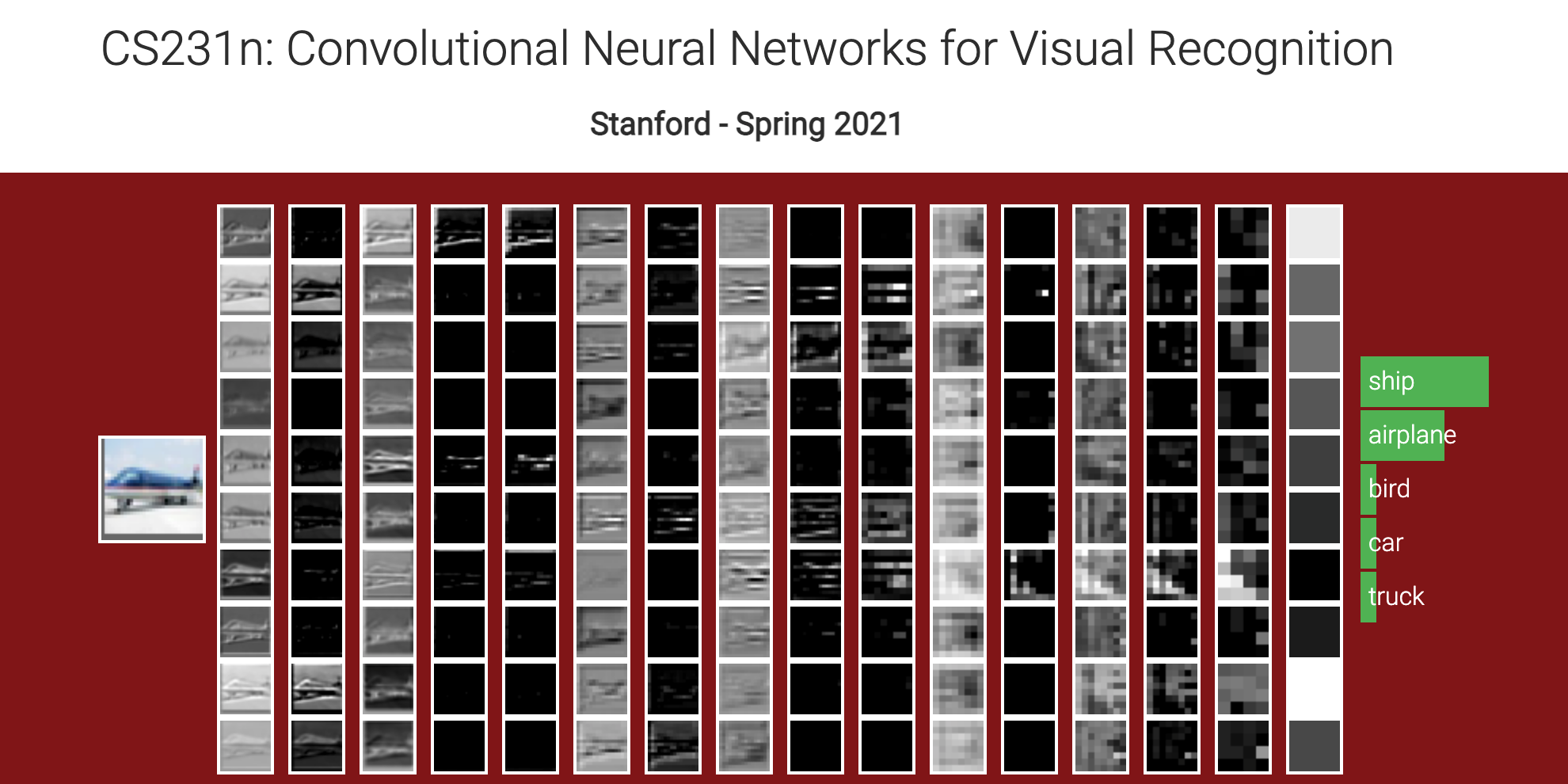

Stanford CS231n: Convolutional Neural Networks for Visual Recognition (Spring 2021)

Teaching Assistant

Caltech CS165: Foundations of Machine Learning and Statistical Inference (Winter 2023)

Stanford CS129: Applied Machine Learning (Fall 2020)

Stanford CS229: Machine Learning (Spring 2020)

Teaching Assistant

Conference Reviewer: ICML 2024, ICLR 2024, NeurIPS 2023, NeurIPS 2022, ICLR 2022, ICCV 2021, CVPR 2021, ECCV 2020